If you haven't taken part in the yanny/laurel controversy over the last couple of days, allow me to sincerely congratulate you.

The viral speech synth clip has met the AI hype train and the result is, like everything in this mortal world, disappointing.

Sonix, a company that produces AI-based speech recognition software, ran the ambiguous sound clip through Google, Amazon and Watson transcription tools, and of course its own.

Google and Sonix managed to get it on the first try. It's "laurel," by the way.

Laurel.

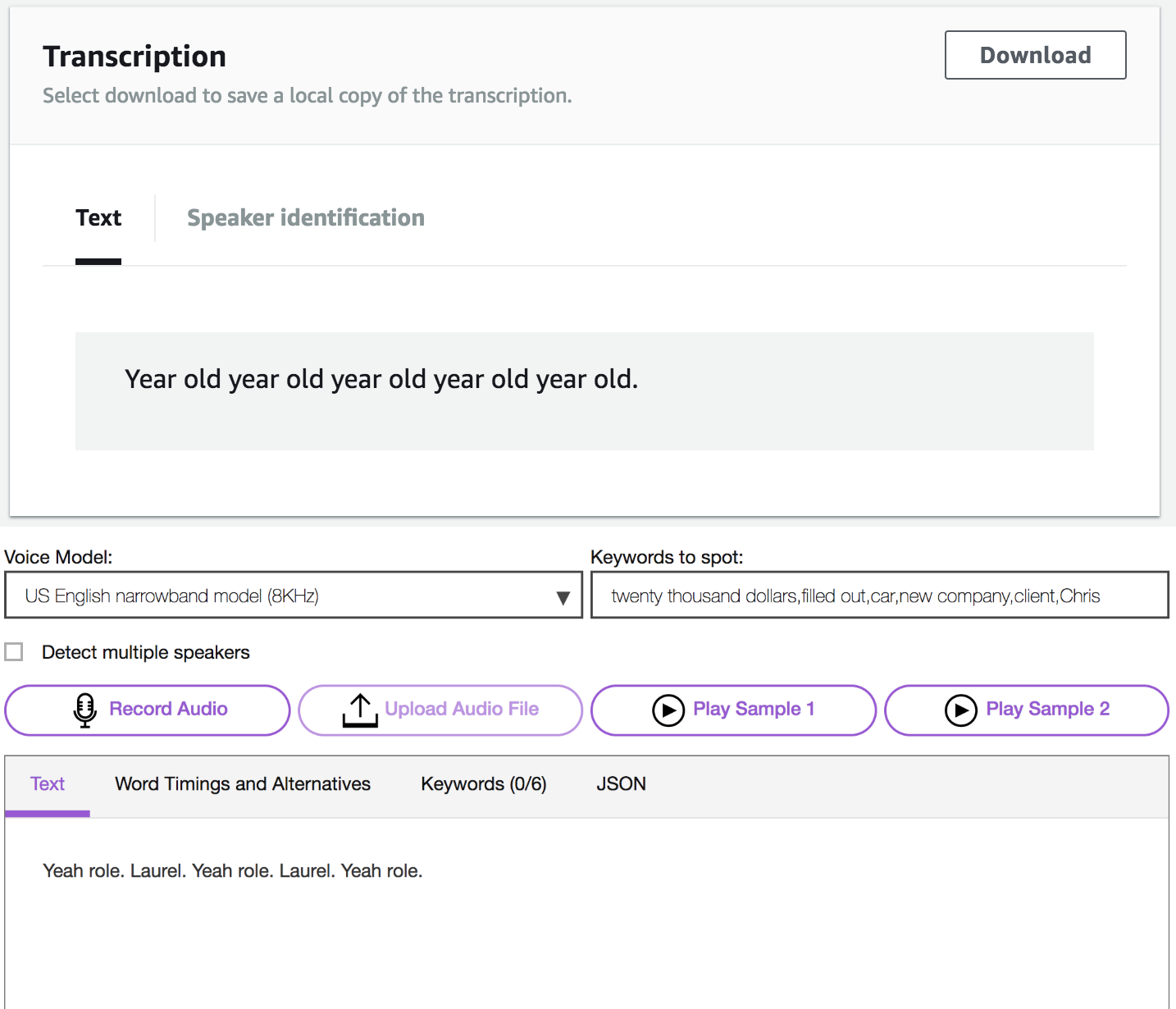

But Amazon stumbled, repeatedly producing "year old" as its best guess for what the robotic voice was saying.

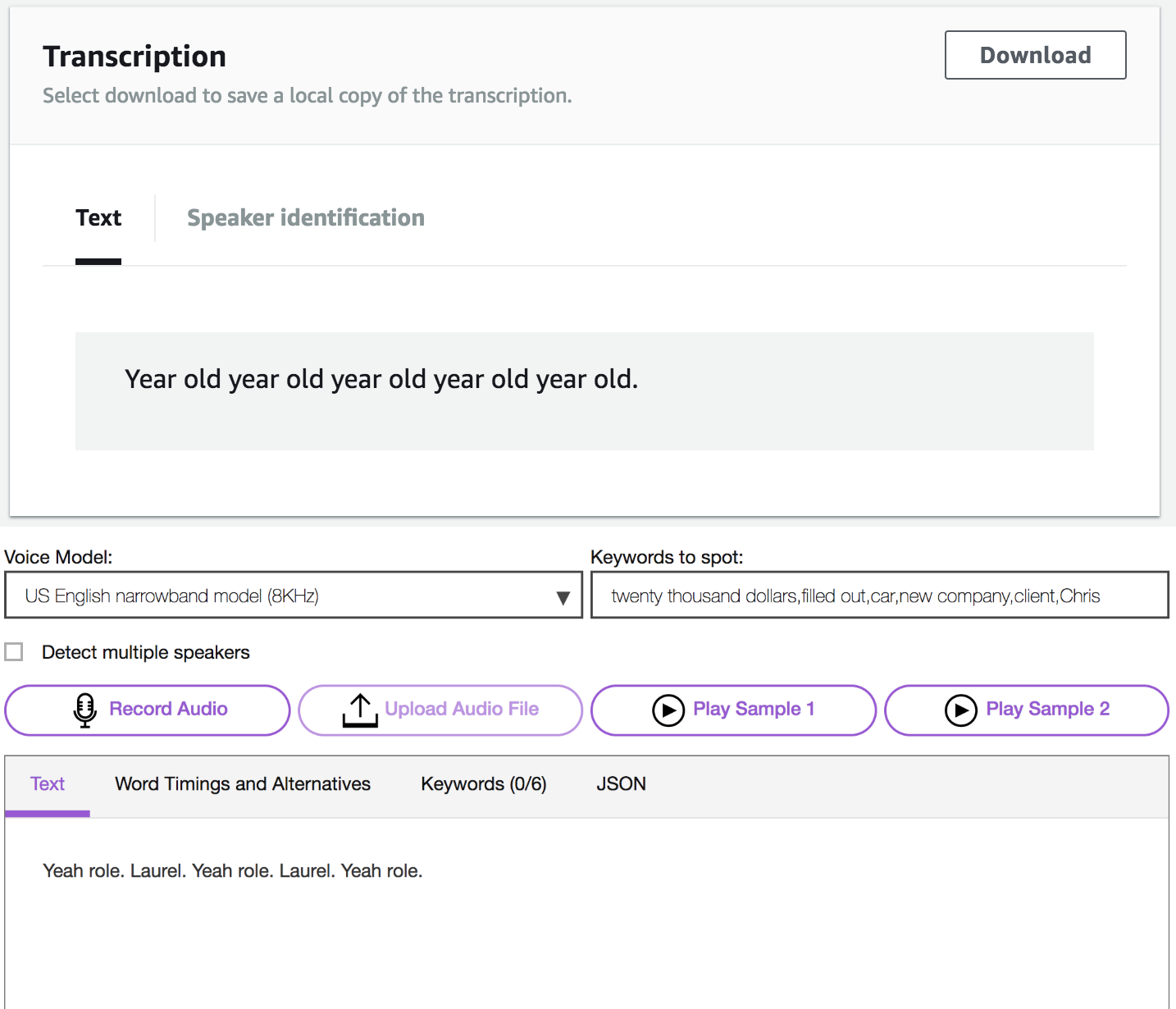

IBM Watson, amazingly, got it only half the time, alternating between hearing "yeah role" and "laurel." So in a way, it's the most human of them all.

Top: Amazon; bottom: IBM.

Sonix CEO Jamie Sutherland told me in an email that he can't really comment on the mixed success of the other models, not having access to them.

"As you can imagine the human voice is complex and there are so many variations of volume, cadence, accent, and frequency," he wrote.

"The reality is that different companies may be optimizing for different use cases, so the results may vary. It is challenging for a speech recognition model to accommodate for everything."

My guess as an ignorant onlooker is that it may have something to do with the frequencies the models have been trained to prioritize.

Sounds reasonable enough!

It's really an absurd endeavor to appeal to a system based on our own hearing and cognition to make an authoritative judgment in a matter on which our hearing and cognition are demonstrably lacking.