Osonde Osoba

Contributor

Osonde Osoba is an engineer at the nonprofit, nonpartisan RAND Corporation and a member of the faculty of the Pardee RAND Graduate School.

As a teenager in Nigeria, I tried to build an artificial intelligence system. I was inspired by the same dream that motivated the pioneers in the field: that we could create an intelligence of pure logic and objectivity that would free humanity from human error and human foibles.

I was working with weak computer systems and intermittent electricity, and needless to say my AI project failed. Eighteen years later, as an engineer researching artificial intelligence, privacy and machine-learning algorithms, I'm seeing that so far, the premise that AI can free us from subjectivity or bias is also disappointing. We are creating intelligence in our own image. And that's not a compliment.

Researchers have known for a while that purportedly neutral algorithms can mirror or even accentuate racial, gender and other biases lurking in the data they are fed.

Internet searches on names that are more often identified as belonging to black people were found to prompt search engines to generate ads

Algorithms used for job-searching were more likely to suggest higher-paying jobs to male searchers than to female searchers.

Algorithms used in criminal justice also displayed bias.

Five years later, expunging algorithmic bias is turning out to be a tough problem. It takes careful work to comb through millions of sub-decisions to figure out why the algorithm reached the conclusion it did. And even when that is possible, it is not always clear which sub-decisions are the culprits.

Yet applications of these powerful technologies are advancing faster than the flaws can be addressed.

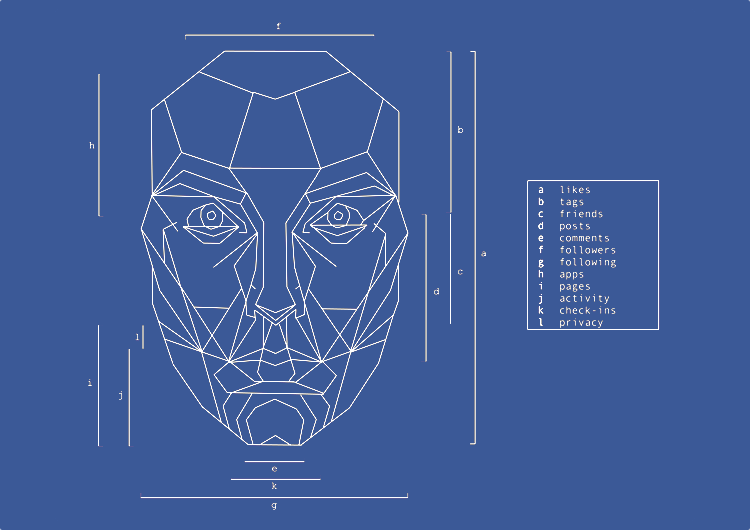

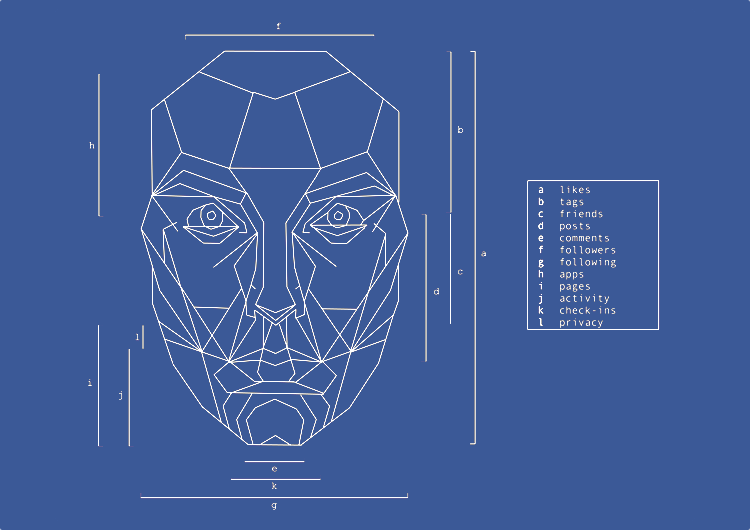

Recent research underscores this machine bias, showing that commercial facial-recognition systems excel at identifying light-skinned males, with an error rate of less than 1 percent. But if you're a dark-skinned female, the chance you'll be misidentified rises to almost 35 percent.
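A gap like that is easy to miss when a system is judged by a single overall accuracy figure. The following is a minimal sketch of a per-group audit, not the method used in the research mentioned above. The function name `error_rate_by_group` and the audit records are hypothetical, and the counts are invented to loosely echo the error rates quoted here.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: share misidentified} from (group, was_correct) pairs."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        if not was_correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical audit log, invented for illustration only.
records = (
    [("light-skinned male", True)] * 99
    + [("light-skinned male", False)] * 1
    + [("dark-skinned female", True)] * 65
    + [("dark-skinned female", False)] * 35
)

rates = error_rate_by_group(records)

# The single aggregate figure that would hide the disparity.
overall = sum(1 for _, ok in records if not ok) / len(records)

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} misidentified")
print(f"overall: {overall:.0%} misidentified")
```

On this made-up data the overall error rate sits between the two group rates, which is why a system can look acceptable in aggregate while failing one group far more often than another.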

AI systems are often only as intelligent, and as fair, as the data used to train them. They use the patterns in the data they have been fed and apply them consistently to make future decisions. Consider an AI tasked with sorting the best nurses for a hospital to hire. If the AI has been fed historical data (profiles of excellent nurses who have mostly been female), it will tend to judge female candidates to be better fits. Algorithms need to be carefully designed to account for historical biases.
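The nurse example can be made concrete with a toy sketch. It assumes a deliberately naive scorer that rates a candidate by how closely they resemble past excellent hires. The `train` and `score` functions, the feature names and the ten historical profiles are all hypothetical, and no real hiring system is this simple.

```python
from collections import Counter

def train(profiles, features):
    """Count how often each (feature, value) pair appears among past excellent hires."""
    counts = Counter()
    for profile in profiles:
        for feature in features:
            counts[(feature, profile[feature])] += 1
    return counts, len(profiles)

def score(candidate, model, features):
    """Average share of past excellent hires who match the candidate on each feature."""
    counts, n = model
    return sum(counts[(f, candidate[f])] / n for f in features) / len(features)

# Hypothetical history: 9 of 10 excellent nurses were female, all were certified.
history = [{"gender": "F", "certified": True}] * 9 + [{"gender": "M", "certified": True}]

# Two candidates with identical qualifications who differ only in gender.
candidate_a = {"gender": "F", "certified": True}
candidate_b = {"gender": "M", "certified": True}

with_gender = ["gender", "certified"]
model = train(history, with_gender)
score_a = score(candidate_a, model, with_gender)
score_b = score(candidate_b, model, with_gender)

# Same scorer, trained and applied without the gender feature.
without_gender = ["certified"]
blind_model = train(history, without_gender)
blind_a = score(candidate_a, blind_model, without_gender)
blind_b = score(candidate_b, blind_model, without_gender)

print(score_a, score_b)
print(blind_a, blind_b)
```

In this sketch the equally qualified male candidate scores lower only because the historical pool was mostly female. Dropping the gender feature equalizes the two scores here, but that is an illustration rather than a general fix: in real data, other features can act as stand-ins for the one that was removed.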

Occasionally, AI systems get food poisoning. The most famous case was Watson, the AI that first defeated humans in 2011 on the television game show Jeopardy. Watson's masters at IBM needed to teach it language, including American slang, so they fed it the contents of the online Urban Dictionary. But after ingesting that colorful linguistic meal, Watson developed a swearing habit. It began to punctuate its responses with four-letter words.

We have to be careful what we feed our algorithms. Belatedly, companies now understand that they can't train facial-recognition technology by mainly using photos of white men. But better training data alone won't solve the underlying problem of making algorithms achieve fairness.

Algorithms can already tell you what you might want to read, who you might want to date and where you might find work. When they are able to advise on who gets hired, who receives a loan or the length of a prison sentence, AI will have to be made more transparent, and more accountable and respectful of society's values and norms.

Accountability begins with human oversight when AI is making sensitive decisions. In an unusual move, Microsoft president Brad Smith recently called for the United States government to consider requiring human oversight of facial-recognition technologies.

The next step is to disclose when humans are subject to decisions made by AI. Top-down government regulation may not be a feasible or desirable fix for algorithmic bias. But processes can be created that would allow people to appeal machine-made decisions by appealing to humans. The EU's new General Data Protection Regulation establishes the right for individuals to know and challenge automated decisions.

Today people who have been misidentified, whether in an airport or an employment database, have no recourse.

They might have been knowingly photographed for a driver's license, or covertly filmed by a surveillance camera (which has a higher error rate). They cannot know where their image is stored, whether it has been sold or who can access it.

They have no way of knowing whether they have been harmed by erroneous data or unfair decisions.

Minorities are already disadvantaged by such immature technologies, and the burden they bear for the improved security of society at large is both inequitable and uncompensated. Engineers alone will not be able to address this. An AI system is like a very smart child just beginning to understand the complexities of discrimination.

To realize the dream I had as a teenager, of an AI that can free humans from bias instead of reinforcing bias, will require a range of experts and regulators to think more deeply not only about what AI can do, but what it should do, and then teach it how.